This is a recording from a live feed. The camera is behind the viewer, who sees on the screen four images of themselves. One is live and the others are delayed, so that it gives the impression of a group of people collaborating. Perhaps speaking to each other. Perhaps handing something to each other.

This setup needed only one camera and one screen. In general, though, video installations involving Live Video use more than one screen, and often more than one camera.

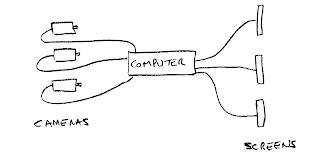

With a setup like the one above, there is a lot you can do with Live Video. Normally you will have one or more cameras attached to a computer, and one or more screens. By overlaying feeds on each other, delaying feeds, and distributing cameras and screens around the gallery, you can create interesting participatory installations like the one above.

Another equally simple example is to use a single camera and a single screen again, so that the screen shows a kaleidoscope of the camera's image. If the camera is on an umbilical cord, some fascinating patterns can be created.

A famous example of an installation using a live camera is by Bruce Nauman, from 1971.

*A Nauman Corridor, from above*

The second screen also shows something enticing: the same image as the first screen, but delayed by 5 to 10 seconds. So when you reach the end of the corridor, you can see your own recent history.

This was quite a difficult setup to build in 1971, when the video delay had to be made with a tape loop or some other hardware. Short delays like this are straightforward to achieve with contemporary software. I'll explain how later in this post.
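As a rough illustration of why this is now easy, here is a minimal Python sketch (not the program used for the installations in this post) of a fixed delay: keep the most recent frames in a ring buffer and show the oldest one. The class name `FrameDelay` is hypothetical, and a frame can be any object, for example an image array from whatever capture library you use.

```python
from collections import deque
from typing import Deque, Generic, Optional, TypeVar

T = TypeVar("T")  # a frame: any object, e.g. an image array


class FrameDelay(Generic[T]):
    """Fixed delay line: push each new frame, get back the one from
    `delay_frames` frames earlier (or None until enough have arrived)."""

    def __init__(self, delay_frames: int) -> None:
        if delay_frames < 0:
            raise ValueError("delay_frames must be non-negative")
        self._size = delay_frames + 1
        # A deque with maxlen drops the oldest frame automatically.
        self._buffer: Deque[T] = deque(maxlen=self._size)

    def push(self, frame: T) -> Optional[T]:
        self._buffer.append(frame)
        if len(self._buffer) < self._size:
            return None  # still filling: nothing old enough to show yet
        return self._buffer[0]  # the oldest frame kept
```

In a capture loop you would push every camera frame and send whatever comes back to the screen. At an assumed 25 frames per second, an 8-second delay needs `FrameDelay(200)`. Memory is the main cost: an uncompressed 640 x 480 RGB frame is 921,600 bytes, so 200 of them take about 184 MB.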

Here are some other examples of Live Video that can easily be set up in a gallery:

- Ghosting. The image on screen superimposes the current image and a delayed one, so when you leave, you don't. You stay a while as a ghost and then leave.

- A camera above the screen shows visitors milling around the gallery. The image is delayed by 5 seconds, so when visitors approach, they don't see themselves at first; then they see themselves approaching.

- As above, but with two screens, each with its own camera, placed side by side about 2 metres apart. The delayed feed from each camera goes to the other screen, so if you move quickly between the screens, you get to see yourself and your companions, delayed.

- Kaleidoscopes of the live feed, of varying complexity: the familiar hexagonal kaleidoscope, or an array of 16 copies rather than the 4 shown above, flipped to create a continuous image.

- Pixellated images, where the pixellation is more arty than what you get just by lowering the resolution. For example, each square pixel is replaced by a circle or a sphere.

- Slow/Fast. A live feed which periodically slows down and then speeds up, so that it is sometimes a little behind real time and sometimes a long way behind.
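The circle variant of the pixellated effect can be sketched like this. The approach is an assumption, not necessarily how the author does it: average each square block, then paint a disc of that average on a black background. The function `circle_pixellate` is hypothetical, and for simplicity a frame is assumed to be grayscale, stored as a list of rows.

```python
from typing import List

Frame = List[List[int]]  # assumed: grayscale frame, rows of 0-255 values


def circle_pixellate(frame: Frame, block: int, background: int = 0) -> Frame:
    """Replace each block x block square with a disc of its average value."""
    h, w = len(frame), len(frame[0])
    out = [[background] * w for _ in range(h)]
    radius_sq = (block / 2) ** 2
    for top in range(0, h, block):
        for left in range(0, w, block):
            ys = range(top, min(top + block, h))
            xs = range(left, min(left + block, w))
            # Average brightness of this block (edge blocks may be smaller).
            cells = [frame[y][x] for y in ys for x in xs]
            mean = round(sum(cells) / len(cells))
            # Centre of the full block; the disc just touches its sides.
            cy = top + (block - 1) / 2
            cx = left + (block - 1) / 2
            for y in ys:
                for x in xs:
                    if (y - cy) ** 2 + (x - cx) ** 2 <= radius_sq:
                        out[y][x] = mean
    return out
```

Applied to every frame of the live feed, this gives a grid of dots whose brightness follows the image. A sphere would additionally need shading inside each disc.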

Below, I'll explain how to achieve each of these effects.

This is a simple example of the Ghosting Effect. The visitor sees themselves on the screen. Not interesting, until they see their ghost arrive a second later. Then they do a double take. When they stop, the ghost catches up. When they leave, the ghost stays a while, then leaves. It's just as easy to set up two ghosts, or three, and each has its own effect.

The single-camera image with a longer delay (5 seconds seems about right), the second example in the list above, has a different effect. This transmutation of time is disconcerting, even more so when there are two cameras playing onto separate screens, as in the third example. It takes time to work out the relationship between real time and recorded time. I haven't a recording of that to show here yet. I need to make one.

Finally, the Kaleidoscope effect shown in the second example above is interesting enough with 4 replicas. This corresponds to having two mirrors at 90 degrees, and it is much easier to construct with conventional video software, which is designed for chopping rectangular chunks out of video. But the normal kaleidoscope, the kind we had as kids, requires the mirrors to be at 60 degrees, so that we get six copies of the image. This is a little harder to construct, and I don't have a recording to show for it yet. Check back in a couple of weeks, when I should have one.
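Here is one way to build the 60-degree kaleidoscope for a single frame. This is a sketch under my own assumptions, not the method behind any recording in this post. For every output pixel, take its angle around the centre, fold that angle into the 60-degree wedge between the two mirrors, and copy the source pixel at the folded position. That yields six copies of the wedge around the centre. The names `fold_angle` and `kaleidoscope6` are hypothetical, and frames are assumed to be grayscale lists of rows.

```python
import math
from typing import List

Frame = List[List[int]]  # assumed: grayscale frame, rows of pixel values

WEDGE = math.pi / 3  # 60 degrees: the angle between the two mirrors


def fold_angle(theta: float) -> float:
    """Map any angle into the 0..60 degree wedge between the mirrors."""
    # Reflecting in two mirrors 60 degrees apart is a 120-degree rotation,
    # so the pattern repeats every 120 degrees...
    phi = theta % (2 * WEDGE)
    # ...and the second half of each 120-degree sector is a mirror image.
    return 2 * WEDGE - phi if phi > WEDGE else phi


def kaleidoscope6(frame: Frame) -> Frame:
    """Six mirrored copies of one 60-degree wedge, around the frame centre."""
    h, w = len(frame), len(frame[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dy, dx = y - cy, x - cx
            r = math.hypot(dx, dy)
            t = fold_angle(math.atan2(dy, dx))
            # Sample the source at the folded position, clamped to the frame.
            sy = min(max(round(cy + r * math.sin(t)), 0), h - 1)
            sx = min(max(round(cx + r * math.cos(t)), 0), w - 1)
            out[y][x] = frame[sy][sx]
    return out
```

Because the pixel mapping never changes, a real-time version would compute the `(sy, sx)` lookup table once and reuse it for every frame of the live feed.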

To describe how these Live Video feeds were constructed, I need to use a little bit of maths, so if you are not up for that, you can skip the rest.

I will denote by overH(v1, v2) the video constructed from videos v1 and v2 by laying them side by side horizontally. Similarly, I will denote by overV(v1, v2) the video constructed from v1 and v2 by stacking them vertically, one above the other.

Likewise, flipH(v) is the video obtained by flipping the video v horizontally, and flipV(v) flips v vertically.

So you can see that, if v is the live feed from the camera, overH(v, flipH(v)) places the feed and its mirror image side by side. Call that v1. Then overV(v1, flipV(v1)) constructs the Kaleidoscope effect shown in the second example above.
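These four operations translate almost directly into code. In this minimal Python sketch (an illustration, not the author's scripts), a video is modelled as a function from frame number to frame, and a frame is a list of rows of pixel values. The names overH, overV, flipH and flipV follow the notation above. I have assumed that overV puts v1 on top and that both videos have the same frame size.

```python
from typing import Callable, List

Frame = List[List[int]]         # a frame: rows of pixel values
Video = Callable[[int], Frame]  # a video: frame number -> frame


def overH(v1: Video, v2: Video) -> Video:
    """v1 and v2 side by side, v1 on the left."""
    return lambda t: [r1 + r2 for r1, r2 in zip(v1(t), v2(t))]


def overV(v1: Video, v2: Video) -> Video:
    """v1 stacked above v2."""
    return lambda t: v1(t) + v2(t)


def flipH(v: Video) -> Video:
    """Mirror each frame left to right."""
    return lambda t: [row[::-1] for row in v(t)]


def flipV(v: Video) -> Video:
    """Turn each frame upside down."""
    return lambda t: v(t)[::-1]


def kaleidoscope4(v: Video) -> Video:
    """The four-way kaleidoscope: overV(v1, flipV(v1)), v1 = overH(v, flipH(v))."""
    v1 = overH(v, flipH(v))
    return overV(v1, flipV(v1))
```

Nothing is computed until a frame is requested: `kaleidoscope4(camera)(t)` builds frame `t` on demand, so each expression really does just denote a video. One practical caveat is that this version evaluates v1 twice per frame; a real program would compute it once and reuse it.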

A couple more operations are all we need to construct all the examples shown here. These are delay(v), which is the video v delayed by one second, and over(v1, v2), which places a translucent video v2 on top of video v1. So the ghost effect is just over(v, delay(v)). Simple.
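In the same style, here is a sketch of delay and over, again modelling a video as a function from frame number to frame. Several choices here are my assumptions rather than details from the post: a frame rate of `FPS = 25`, so one second is 25 frames; a delayed video that holds its first frame until enough time has passed; and grayscale frames stored as lists of rows.

```python
from typing import Callable, List

Frame = List[List[int]]         # assumed: grayscale frame, rows of 0-255 values
Video = Callable[[int], Frame]  # a video: frame number -> frame

FPS = 25  # assumed frame rate, so a one-second delay is 25 frames


def delay(v: Video, frames: int = FPS) -> Video:
    """v shown `frames` frames late; before that, it holds v's first frame."""
    return lambda t: v(max(t - frames, 0))


def over(v1: Video, v2: Video, alpha: float = 0.5) -> Video:
    """v2 laid over v1 with opacity alpha (0 = invisible, 1 = opaque)."""
    def frame(t: int) -> Frame:
        f1, f2 = v1(t), v2(t)
        return [[round((1 - alpha) * p1 + alpha * p2) for p1, p2 in zip(r1, r2)]
                for r1, r2 in zip(f1, f2)]
    return frame


def ghost(v: Video) -> Video:
    """The ghost effect: over(v, delay(v))."""
    return over(v, delay(v))
```

Two ghosts can be composed from the same pieces, for example `over(ghost(v), delay(v, 2 * FPS), 1 / 3)`, which weights the live image and both ghosts roughly equally. For a live camera, `v(t - frames)` only exists if recent frames have been kept, so in practice delay has to be backed by a buffer of recent frames.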

This is simple mathematics, although you might think it looks more like programming. It is pure mathematics because every expression denotes an object. Here, all expressions denote videos. The operations (overH, flipH etc.) combine videos to create videos.

But, while it is pure mathematics, it is also programming. It's what is called functional programming. This is how I designed the scripts before I turned them into real programs to achieve the live effects shown here.
